Semaphores

One can use semaphores as both locks and condition variables.

A semaphore is an object with an integer value that we can manipulate with two routines; in the POSIX standard, these routines are sem wait() and sem post()1 . Because the initial value of the semaphore determines its behaviour, before calling any other routine to interact with the semaphore, we must first initialize it to some value,

We will now focus on how to use these primitives;

1 int sem_wait(sem_t *s) {

2 decrement the value of semaphore s by one

3 wait if value of semaphore s is negative

4 }

5

6 int sem_post(sem_t *s) {

7 increment the value of semaphore s by one

8 if there are one or more threads waiting, wake one

9 }

we can see that sem.wait() will either return right away (because the value of the semaphore was one or higher when we called sem wait()), or it will cause the caller to suspend execution waiting for a subsequent post. Of course, multiple calling threads may call into sem wait(), and thus all be queued waiting to be woken

we can see that sem post() does not wait for some particular condition to hold like sem wait() does. Rather, it simply increments the value of the semaphore and then, if there is a thread waiting to be woken, wakes one of them up.

the value of the semaphore, when negative, is equal to the number of waiting threads

Don’t worry (yet) about the seeming race conditions possible within the semaphore; assume that the actions they make are performed atomically. We will soon use locks and condition variables to do just this.

31.2 Binary Semaphores (Locks)

We are now ready to use a semaphore. Our first use will be one with which we are already familiar with: using a semaphore as a lock.

sem_t m;

sem_init( & m, 0, X); // initialize to X; what should X be?

sem_wait( & m);

// critical section here

sem_post( & m);

int sem_wait(sem_t * s) {

decrement the value of semaphore s by one

wait

if value of semaphore s is negative

}

int sem_post(sem_t * s) {

increment the value of semaphore s by one

if there are one or more threads waiting, wake one

}

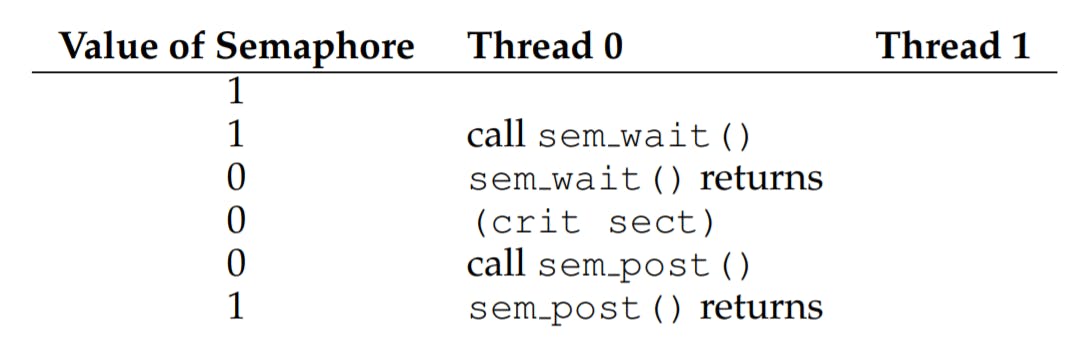

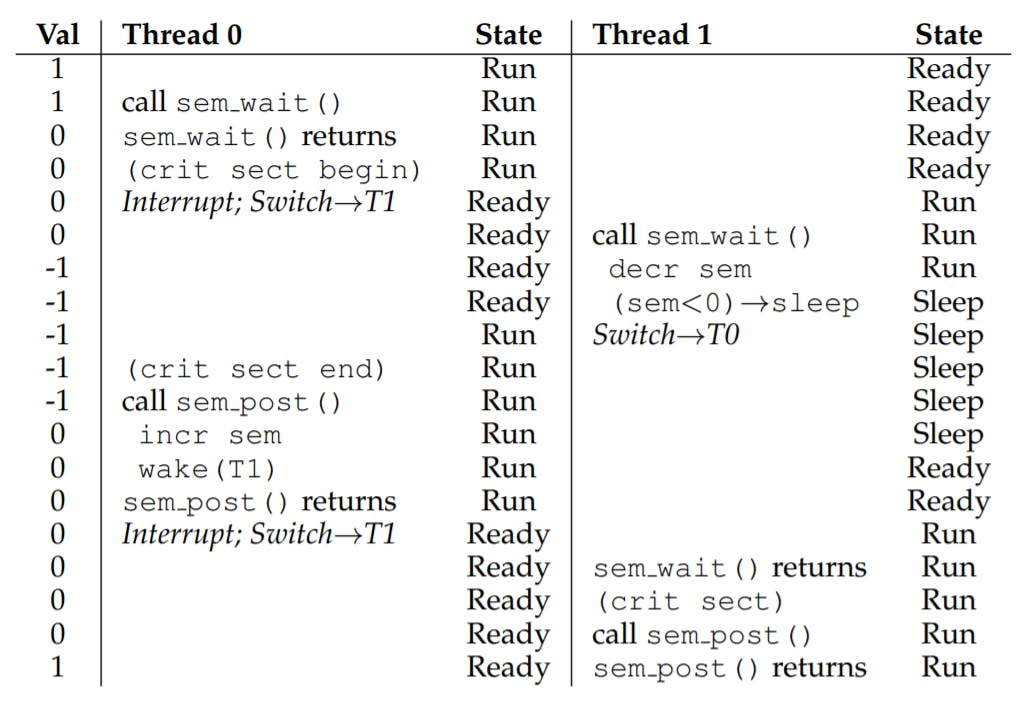

you’ll see that we simply surround the critical section of interest with a sem wait()/sem post() pair. Critical to making this work, though, is the initial value of the semaphore m (initialized to X in the figure). What should X be?

we can see that the initial value should be 1

Why is that?

We can say that the absolute number of a non-positive counter will be the threads that wait for the lock to be available, starting from value 1 means that you allow one thread to enter let's denote this thread as t1 when t1 will call wait will decrement the counter and it will be 0(which is non-positive) and now the absolute value of the counter(which is 0) means how many threads waiting, so if t2 try to enter the critical section by first calling wait(), it will decrement the counter again, the counter will be -1 which means |-1| (which is 1) thread/s are waiting for this lock.

This trace for threads interrupted each other to assure that no data race will happen

Semaphores For Ordering

Semaphores are also useful to order events in a concurrent program. For example, a thread may wish to wait for a list to become non-empty so it can delete an element from it

we often find one thread waiting for something to happen, and another thread making that something happens and then signalling that it has happened

We are thus using the semaphore as an ordering primitive (similar to our use of condition variables earlier)

sem_t s;

void * child(void * arg) {

printf("child\n");

sem_post( & s); // signal here: child is done

return NULL;

}

int main(int argc, char * argv[]) {

sem_init( & s, 0, X); // what should X be?

printf("parent: begin\n");

pthread_t c;

Pthread_create( & c, NULL, child, NULL);

sem_wait( & s); // wait here for child

printf("parent: end\n");

return 0;

}

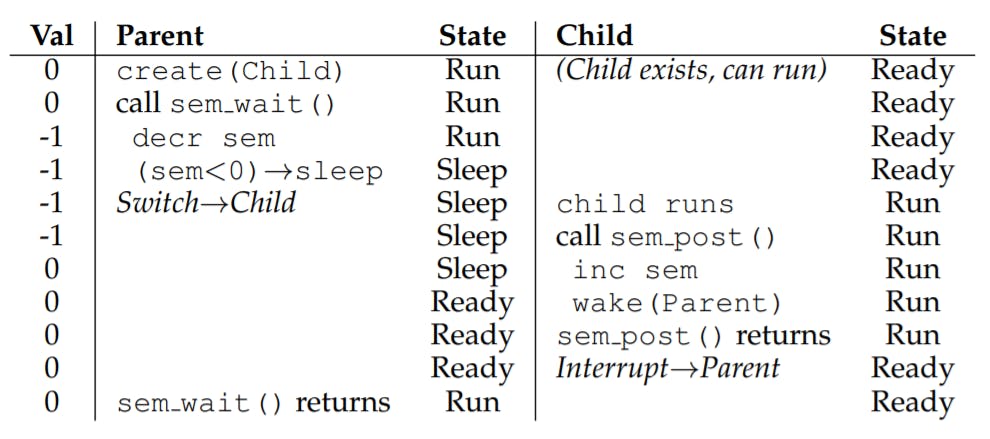

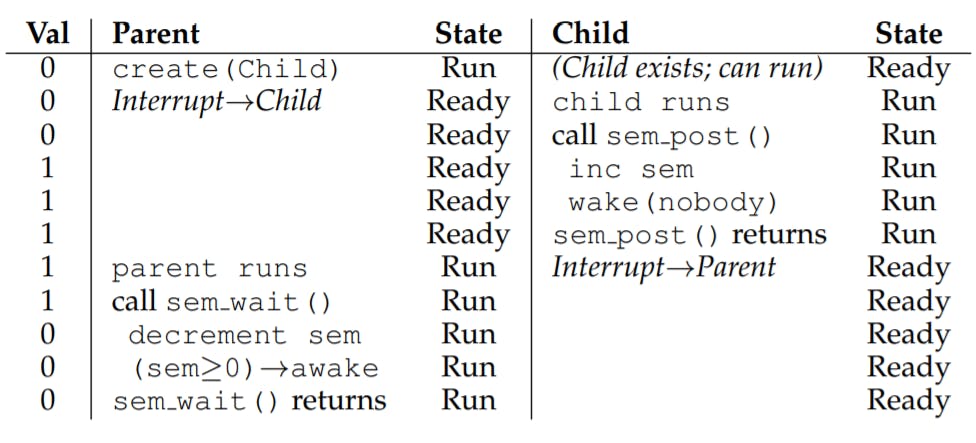

There are 2 cases

First case another case will be ready for run but no interrupts happen and then continue running in main until we hit wait() which will decrement the value of the counter, so counter now will be -1 which we know that |-1| threads are waiting so we have to go to sleep until the other thread will call post incrementing the counter and the counter will be non-negative and wake this thread

The second case occurs when the child runs to completion before the parent gets a chance to call sem wait(). the child will first call sem post(), thus incrementing the value of the semaphore from 0 to 1. When the parent then gets a chance to run, it will call sem wait() and find the value of the semaphore to be 1; the parent will thus decrement the value (to 0) and return from sem wait() without waiting

tip:

One simple way to think about it is to consider the number of resources you are willing to give away immediately after initialization.

With the lock, it was 1, because you are willing to have the lock locked (given away) immediately after initialization.

With the ordering case, it was 0, because there is nothing to give away at the start; only when the child thread is done is the resource created, at which the point, the value is incremented to 1.

31.4 The Producer/Consumer (Bounded Buffer) Problem

Our first attempt at solving the problem introduces two semaphores, empty and full, which the threads will use to indicate when a buffer entry has been emptied or filled, respectively.

int buffer[MAX];

int fill = 0;

int use = 0;

void put(int value) {

buffer[fill] = value; // Line F1

fill = (fill + 1) % MAX; // Line F2

}

int get() {

int tmp = buffer[use]; // Line G1

use = (use + 1) % MAX; // Line G2

return tmp;

}

sem_t empty;

sem_t full;

void * producer(void * arg) {

int i;

for (i = 0; i < loops; i++) {

sem_wait( & empty); // Line P1

put(i); // Line P2

sem_post( & full); // Line P3

}

}

void * consumer(void * arg) {

int tmp = 0;

while (tmp != -1) {

sem_wait( & full); // Line C1

tmp = get(); // Line C2

sem_post( & empty); // Line C3

printf("%d\n", tmp);

}

}

int main(int argc, char * argv[]) {

// ...

sem_init( & empty, 0, MAX); // MAX are empty

sem_init( & full, 0, 0); // 0 are full

//...

}

there is a bug

Let us now imagine that MAX is greater than 1 (say MAX=10). For this example, let us assume that there are multiple producers and multiple consumers. We now have a problem: a race condition. look more closely at the put() and get() code.

Imagine two producers (Pa and Pb) both calling into put() at roughly the same time. Assume producer Pa gets to run first, and just starts to fill the first buffer entry (fill=0 at Line F1). Before Pa gets a chance to increment the fill counter to 1, it is interrupted. Producer Pb starts to run, and at Line F1 it also puts its data into the 0th element of buffer, which means that the old data there is overwritten

Solution

The filling of a buffer and incrementing of the index into the buffer is a critical section

So let’s use our friend the binary semaphore and add some locks.

void * producer(void * arg) {

int i;

for (i = 0; i < loops; i++) {

sem_wait( & empty); // Line P1

sem_wait( & mutex); // Line P1.5 (MUTEX HERE)

put(i); // Line P2

sem_post( & mutex); // Line P2.5 (AND HERE)

sem_post( & full); // Line P3

}

}

void * consumer(void * arg) {

int i;

for (i = 0; i < loops; i++) {

sem_wait( & full); // Line C1

sem_wait( & mutex); // Line C1.5 (MUTEX HERE)

int tmp = get(); // Line C2

sem_post( & mutex); // Line C2.5 (AND HERE)

sem_post( & empty); // Line C3

printf("%d\n", tmp);

}

}

That seems like the right idea, but it also doesn’t work. Why? Deadlock.

Avoiding Deadlock

now that you figured it out, here is the answer. Imagine two threads, one producer and one consumer. The consumer gets to run first. It acquires the mutex (Line C0), and then calls sem wait() on the full semaphore (Line C1); because there is no data yet, this call causes the consumer to block and thus yield the CPU; importantly, though, the consumer still holds the lock.

A producer then runs. It has data to produce and if it were able to run, it would be able to wake the consumer thread and all would be good. Unfortunately, the first thing it does is call sem wait() on the binary mutex semaphore (Line P0). The lock is already held. Hence, the producer is now stuck waiting too

There is a simple cycle here. The consumer holds the mutex and is waiting for someone to signal full. The producer could signal full but is waiting for the mutex. Thus, the producer and consumer are each stuck waiting for each other: a classic deadlock.

At Last, A Working Solution To solve this problem, we simply must reduce the scope of the lock. Figure 31.12 (page 10) shows the correct solution. As you can see, we simply move the mutex acquire and release to be just around the critical section;

Reader-Writer Locks

For example, imagine a number of concurrent list operations, including inserts and simple lookups. While inserts change the state of the list (and thus a traditional critical section makes sense), lookups simply read the data structure; as long as we can guarantee that no insert is ongoing, we can allow many lookups to proceed concurrently

If some thread wants to update the data structure in question, it should call the new pair of synchronization operations: rwlock acquire writelock(), to acquire a write lock, and rwlock release writelock(), to release it.

typedef struct _rwlock_t {

sem_t lock; // binary semaphore (basic lock)

sem_t writelock; // allow ONE writer/MANY readers

int readers; // #readers in critical section

}

rwlock_t;

void rwlock_init(rwlock_t * rw) {

rw - > readers = 0;

sem_init( & rw - > lock, 0, 1);

sem_init( & rw - > writelock, 0, 1);

}

void rwlock_acquire_readlock(rwlock_t * rw) {

sem_wait( & rw - > lock);

rw - > readers++;

if (rw - > readers == 1) // first reader gets writelock

sem_wait( & rw - > writelock);

sem_post( & rw - > lock);

}

void rwlock_release_readlock(rwlock_t * rw) {

sem_wait( & rw - > lock);

rw - > readers--;

if (rw - > readers == 0) // last reader lets it go

sem_post( & rw - > writelock);

sem_post( & rw - > lock);

}

void rwlock_acquire_writelock(rwlock_t * rw) {

sem_wait( & rw - > writelock);

}

void rwlock_release_writelock(rwlock_t * rw) {

sem_post( & rw - > writelock);

}

Thus, once a reader has acquired a read lock, more readers will be allowed to acquire the read lock too; however, any thread that wishes to acquire the write lock will have to wait until all readers are finished; the last one to exit the critical section calls sem post() on “writelock” and thus enables a waiting writer to acquire the lock.

This approach works (as desired), but does have some negatives, especially when it comes to fairness. In particular, it would be relatively easy for readers to starve writers.

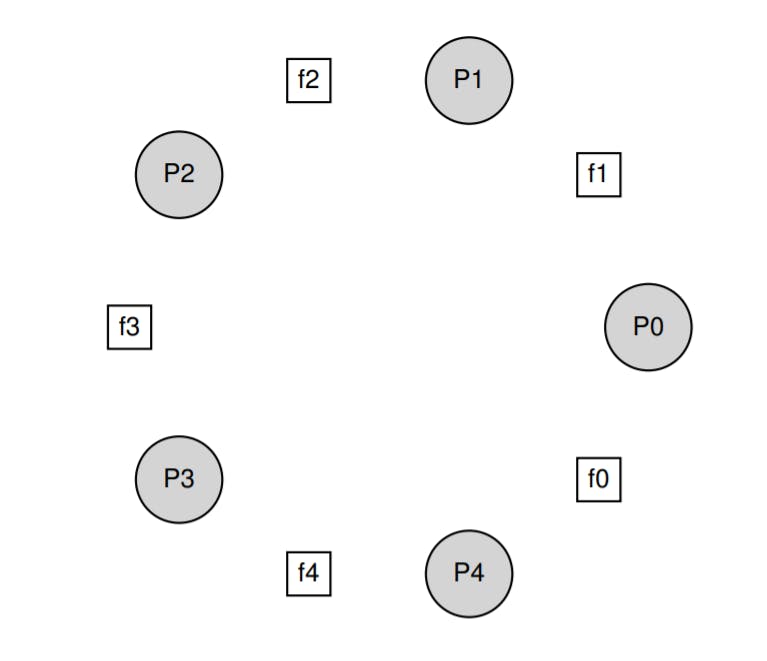

The Dining Philosophers

the problem is

assume there are five “philosophers” sitting around a table. Between each pair of philosophers is a single fork (and thus, five total). The philosophers each have times where they think and don’t need any forks, and times where they eat. In order to eat, a philosopher needs two forks, both the one on their left and the one on their right.

we need a solution such that there is no deadlock, no philosopher starves and never gets to eat, and concurrency is high (i.e., as many philosophers can eat at the same time as possible).

broken solution

void get_forks(int p) {

sem_wait( & forks[left(p)]);

sem_wait( & forks[right(p)]);

}

void put_forks(int p) {

sem_post( & forks[left(p)]);

sem_post( & forks[right(p)]);

}

to acquire the forks, we simply grab a “lock” on each one: first the one on the left

and then the one on the right. When we are done eating, we release them. Simple

Unfortunately, in this case, simple means broken. Can you see the problem that arises?

The problem is deadlock. If each philosopher happens to grab the fork on their left before any philosopher can grab the fork on their right, each will be stuck holding one fork and waiting for another, forever. Specifically, philosopher 0 grabs fork 0, philosopher 1 grabs fork 1, philosopher 2 grabs fork 2, philosopher 3 grabs fork 3, and philosopher 4 grabs fork 4; all the forks are acquired, and all the philosophers are stuck waiting for a fork that another philosopher possesses.

We’ll study deadlock in the next chapter

A Solution: Breaking The Dependency

void get_forks(int p) {

if (p == 4) {

sem_wait( & forks[right(p)]);

sem_wait( & forks[left(p)]);

} else {

sem_wait( & forks[left(p)]);

sem_wait( & forks[right(p)]);

}

}

The simplest way to attack this problem is to change how forks are acquired by at least one of the philosophers let’s assume that philosopher 4 (the highest numbered one) gets the forks in a different order than the others

Because the last philosopher tries to grab right before left, there is no situation where each philosopher grabs one fork and is stuck waiting for another; the cycle of waiting is broken.

Thread Throttling

One other simple use case for semaphores can cause a problem, The specific problem is this: how can a programmer prevent “too many” threads from doing something at once and bogging the system down?

Let’s consider a more specific example. Imagine that you create hundreds of threads to work on some problem in parallel. However, in a certain part of the code, each thread acquires a large amount of memory to perform part of the computation; let’s call this part of the code the memory-intensive region. If all of the threads enter the memory-intensive region at the same time, the sum of all the memory allocation requests will exceed the amount of physical memory on the machine. As a result, the machine will start thrashing (i.e., swapping pages to and from the disk), and the entire computation will slow to a crawl. A simple semaphore can solve this problem. By initializing the value of the semaphore to the maximum number of threads you wish to enter the memory-intensive region at once, and then putting a sem wait() and sem post() around the region, a semaphore can naturally throttle the number of threads that are ever concurrently in the dangerous region of the code.

decide upon a threshold for “too many”, and then use a semaphore to limit the number of threads concurrently executing the piece of code in question. We call this approach throttling

How To Implement Semaphores

Let’s use our low-level synchronization primitives, locks and condition variables, to build our own version of semaphores

we use just one lock and one condition variable, plus a state variable to track the value of the semaphore

typedef struct __Zem_t {

int value;

pthread_cond_t cond;

pthread_mutex_t lock;

}

Zem_t;

// only one thread can call this

void Zem_init(Zem_t * s, int value) {

s - > value = value;

Cond_init( & s - > cond);

Mutex_init( & s - > lock);

}

void Zem_wait(Zem_t * s) {

Mutex_lock( & s - > lock);

while (s - > value <= 0)

Cond_wait( & s - > cond, & s - > lock);

s - > value--;

Mutex_unlock( & s - > lock);

}

void Zem_post(Zem_t * s) {

Mutex_lock( & s - > lock);

s - > value++;

Cond_signal( & s - > cond);

Mutex_unlock( & s - > lock);

}

One subtle difference between our Zemaphore and pure semaphores as defined by Dijkstra is that we don’t maintain the invariant that the value of the semaphore, when negative, reflects the number of waiting threads; indeed, the value will never be lower than zero. This behavior is easier to implement and matches the current Linux implementation.

Summary

Semaphores are a powerful and flexible primitive for writing concurrent programs. Some programmers use them exclusively, shunning locks and condition variables, due to their simplicity and utility

![Operating Systems: Three Easy Pieces [Concurrency Ch.6: Semaphores]](https://cdn.hashnode.com/res/hashnode/image/upload/v1634688328135/5yKv1VkRN.jpeg?w=1600&h=840&fit=crop&crop=entropy&auto=compress,format&format=webp)