Locks

When you have a region of code that is a critical section, and thus needs to be protected to ensure correct operation, locks are quite useful. As an example, assume our critical section looks like this, incrementing shared variable:

balance = balance + 1; // critical section

other critical sections are possible, such as adding an element to a linked list or other more complex updates to shared structures(will discuss this in next chapter)

Now we need to ensure that any such critical section executes as if it were a single atomic instruction. so we need only one thread to be able to run the critical section without any interruption. A lock is just a variable, and thus to use one, you must declare a lock variable of some kind (such as mutex above). This lock variable (or just “lock” for short) holds the state of the lock at any instant in time. It is either available (or unlocked or free) and thus no thread holds the lock, or acquired (or locked or held), and thus exactly one thread holds the lock and presumably is in a critical section.

lock_t mutex; // some globally-allocated lock ’mutex’

...

lock(&mutex);

balance = balance + 1; // critical section

unlock(&mutex);

The semantics of the lock() and unlock() routines are simple. Calling the routine lock() tries to acquire the lock; 1) if no other thread holds the lock (i.e., it is free), the thread will acquire the lock and enter the critical section; Once the owner of the lock calls unlock(), the lock is now available (free) again. 2) if the lock is not free it will wait until the lock is free and then it will acquire the lock entering the critical section then unlocking the lock again. by putting a lock around a section of code, the programmer can guarantee that no more than a single thread can ever be active within that code.

Building A Lock

To build a working lock, we will need some help from our old friend, the hardware, as well as our good pal, the OS. Over the years, a number of different hardware primitives have been added to the instruction sets of various computer architectures; while we won’t study how these instructions are implemented (that, after all, is the topic of a computer architecture class), we will study how to use them in order to build a mutual exclusion primitive like a lock. Before building any locks, we should first understand what our goals are, To evaluate whether a lock works (and works well), we should ensure that:

1)Whether the lock does its basic task, which is to provide mutual exclusion

2)Fairness: Does each thread contending for the lock get a fair shot at acquiring it once it is free? Another way to look at this is by examining the more extreme case: does any thread contending for the lock starve while doing so.

3)performance: when a single thread is running and grabs and releases the lock, what is the overhead of doing so? Another is the case where multiple threads are contending for the lock on a single CPU(More than one thread try to acquire lock which is already locked by another thread).

Controlling Interrupts

One of the earliest solutions used to provide mutual exclusion was to disable interrupts for critical sections; this solution was invented for single-processor systems. Assume we are running on such a single-processor system.

void lock() {

DisableInterrupts();

}

void unlock() {

EnableInterrupts();

}

By turning off interrupts (using some kind of special hardware instruction) before entering a critical section, we ensure that the code inside the critical section will not be interrupted, and thus will execute as if it were atomic. When we are finished, we re-enable interrupts (again, via a hardware instruction) and thus the program proceeds as usual.(remember single-processor system)

The main positive of this approach is its simplicity, unfortunately many negatives which are:

First: this approach requires us to allow any calling thread to perform a privileged operation (turning interrupts on and off), and thus trust that this facility is not abused.

Second: the approach does not work on multiprocessors. If multiple threads are running on different CPUs, and each try to enter the same critical section, it does not matter whether interrupts are disabled; threads will be able to run on other processors, and thus could enter the critical section.

Third: turning off interrupts for extended periods of time can lead to interrupts becoming lost, which can lead to serious systems problems

Finally, and probably least important, this approach can be inefficient. Compared to normal instruction execution, code that masks or unmasks interrupts tends to be executed slowly by modern CPUs.

A Failed Attempt: Just Using Loads/Stores

To move beyond interrupt-based techniques, we will have to rely on CPU hardware and the instructions it provides us to build a proper lock. Let’s first try to build a simple lock by using a single flag variable.

typedef struct __lock_t {

int flag;

}

lock_t;

void init(lock_t * mutex) {

// 0 -> lock is available, 1 -> held

mutex - > flag = 0;

}

void lock(lock_t * mutex) {

while (mutex - > flag == 1) // TEST the flag

; // spin-wait (do nothing)

mutex - > flag = 1; // now SET it!

}

void unlock(lock_t * mutex) {

mutex - > flag = 0;

}

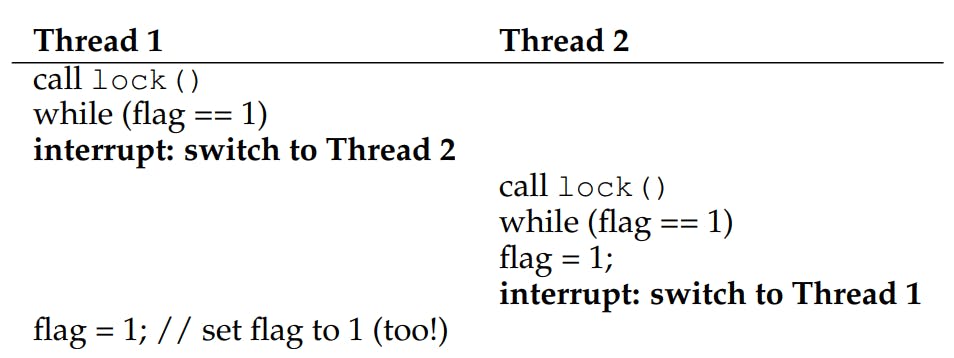

the idea is quite simple: use a simple variable (flag) to indicate whether some thread has possession of a lock. The first thread that enters the critical section will call lock(), tests whether the flag is equal to 1 (in this case, it is not), and then sets the flag to 1 to indicate that the thread now holds the lock. If another thread happens to call lock() while that first thread is in the critical section, it will simply spin-wait in the while loop for that thread to call unlock() and clear the flag. Unfortunately, the code has two problems: one of correctness, and another of performance

, we can easily produce a case where both threads set the flag to 1 and both threads are thus able to enter the critical section. This behavior is what professionals call “bad” – we have obviously failed to provide the most basic requirement: providing mutual exclusion.

The performance problem, which we will address more later on, is the fact that the way a thread waits to acquire a lock that is already held: it endlessly checks the value of flag, a technique known as spin-waiting. Spin-waiting wastes time waiting for another thread to release a lock. The waste is exceptionally high on a uniprocessor.

Building Working Spin Locks with Test-And-Set

we need to relay on hardware primitives now. Because disabling interrupts does not work on multiple processors, and because simple approaches using loads and stores (as shown above) don’t work, system designers started to invent hardware support for locking.

The simplest bit of hardware support to understand is known as a test-and-set (or atomic exchange) instruction.

int TestAndSet(int * old_ptr, int new) {

int old = * old_ptr; // fetch old value at old_ptr

* old_ptr = new; // store ’new’ into old_ptr

return old; // return the old value

}

What the test-and-set instruction does is as follows. It returns the old value pointed to by the old ptr, and simultaneously updates said value to new. The key, of course, is that this sequence of operations is performed atomically. The reason it is called “test and set” is that it enables you to “test” the old value (which is what is returned) while simultaneously “setting” the memory location to a new value; as it turns out, this slightly more powerful instruction is enough to build a simple spin lock

typedef struct __lock_t {

int flag;

}

lock_t;

void init(lock_t * lock) {

// 0: lock is available, 1: lock is held

lock - > flag = 0;

}

void lock(lock_t * lock) {

while (TestAndSet( & lock - > flag, 1) == 1)

; // spin-wait (do nothing)

}

void unlock(lock_t * lock) {

lock - > flag = 0;

}

As explanation for the cases imagine first the case where a thread calls lock() and no other thread currently holds the lock; thus, flag should be 0. When the thread calls TestAndSet(flag, 1), the routine will return the old value of flag, which is 0; thus, the calling thread, which is testing the value of flag, will not get caught spinning in the while loop and will acquire the lock. The thread will also atomically set the value to 1, thus indicating that the lock is now held. When the thread is finished with its critical section, it calls unlock() to set the flag back to zero.

The second case we can imagine arises when one thread already has the lock held (i.e., flag is 1). In this case, this thread will call lock() and then call TestAndSet(flag, 1) as well. This time, TestAndSet() will return the old value at flag, which is 1 (because the lock is held), while simultaneously setting it to 1 again. As long as the lock is held by another thread, TestAndSet() will repeatedly return 1, and thus this thread will spin and spin until the lock is finally released. When the flag is finally set to 0 by some other thread, this thread will call TestAndSet() again, which will now return 0 while atomically setting the value to 1 and thus acquire the lock and enter the critical section

NOTE: You may also now understand why this type of lock is usually referred to as a spin lock. It is the simplest type of lock to build, and simply spins, using CPU cycles, until the lock becomes available. To work correctly on a single processor, it requires a preemptive scheduler (i.e., one that will interrupt a thread via a timer, in order to run a different thread, from time to time). Without preemption, spin locks don’t make much sense on a single CPU, as a thread spinning on a CPU will never relinquish it.

Evaluating Spin Locks

The most important aspect of a lock is correctness: does it provide mutual exclusion? The answer here is yes: the spin lock only allows a single thread to enter the critical section at a time.

fairness. How fair is a spin lock to a waiting thread? Can you guarantee that a waiting thread will ever enter the critical section? The answer here, unfortunately, is bad news: spin locks don’t provide any fairness guarantees.

performance: For spin locks, in the single CPU case, performance overheads can be quite painful; imagine the case where the thread holding the lock is preempted within a critical section. The scheduler might then run every other thread (imagine there are N − 1 others), each of which tries to acquire the lock. on multiple CPUs, spin locks work reasonably well (if the number of threads roughly equals the number of CPUs).

Compare-And-Swap

Another hardware primitive that some systems provide is known as the compare-and-swap instruction

int CompareAndSwap(int * ptr, int expected, int new) {

int original = * ptr;

if (original == expected)

*

ptr = new;

return original;

}

The basic idea is for compare-and-swap to test whether the value at the address specified by ptr is equal to expected; if so, update the memory location pointed to by ptr with the new value. If not, do nothing. In either case, return the original value at that memory location, thus allowing the code calling compare-and-swap to know whether it succeeded or not. The rest of the code is the same as the test-and-set example above. This code works quite similarly; it simply checks if the flag is 0 and if so, atomically swaps in a 1 thus acquiring the lock

Load-Linked and Store-Conditional

The load-linked operates much like a typical load instruction, and simply fetches a value from memory and places it in a register. The key difference comes with the store-conditional, which only succeeds (and updates the value stored at the address just load-linked from) if no intervening store to the address has taken place. In the case of success, the storeconditional returns 1 and updates the value at ptr to value; if it fails, the value at ptr is not updated and 0 is returned.

int LoadLinked(int * ptr) {

return *ptr;

}

int StoreConditional(int * ptr, int value) {

if (no update to * ptr since LoadLinked to this address) {

* ptr = value;

return 1; // success!

} else {

return 0; // failed to update

}

}

The lock() code is the only interesting piece. First, a thread spins waiting for the flag to be set to 0 (and thus indicate the lock is not held). Once so, the thread tries to acquire the lock via the store-conditional; if it succeeds, the thread has atomically changed the flag’s value to 1 and thus can proceed into the critical section.

void lock(lock_t * lock) {

while (1) {

while (LoadLinked( & lock - > flag) == 1)

; // spin until it’s zero

if (StoreConditional( & lock - > flag, 1) == 1)

return; // if set-it-to-1 was a success: all done

// otherwise: try it all over again

}

}

void unlock(lock_t * lock) {

lock - > flag = 0;

}

Note how failure of the store-conditional might arise. One thread calls lock() and executes the load-linked, returning 0 as the lock is not held( the if condition on line 5).

The key feature of these instructions is that only one of these threads will succeed in updating the flag to 1 and thus acquire the lock; the second thread to attempt the store-conditional will fail (because the other thread updated the value of flag between its load-linked and storeconditional) and thus have to try to acquire the lock again.

Fetch-And-Add

One final hardware primitive is the fetch-and-add instruction, which atomically increments a value while returning the old value at a particular address.

int FetchAndAdd(int * ptr) {

int old = * ptr;

* ptr = old + 1;

return old;

}

this primitive can be used to build ticket lock, simply initialize each variable to somenumber(lets say threadturn) a number from 1 to n, lets say that current number(curturn) is k, when some thread (i-th) try to acquire the lock we will check if the curturn == threadturn if thats true we will increment curturn and let the thread acquire otherwise we will wait until the condition met.

typedef struct __lock_t {

int ticket;

int turn;

}

lock_t;

void lock_init(lock_t * lock) {

lock - > ticket = 0;

lock - > turn = 0;

}

void lock(lock_t * lock) {

int myturn = FetchAndAdd( & lock - > ticket);

while (lock - > turn != myturn)

; // spin

}

void unlock(lock_t * lock) {

lock - > turn = lock - > turn + 1;

}

Note one important difference with this solution versus our previous attempts: it ensures progress for all threads. Once a thread is assigned its ticket value, it will be scheduled at some point in the future (once those in front of it have passed through the critical section and released the lock). In our previous attempts, no such guarantee existed; a thread spinning on test-and-set (for example) could spin forever even as other threads acquire and release the lock.

we can see that there is too much spinning. thread 1 won’t have to spin so much and will be able to acquire the lock. Thus, any time a thread gets caught spinning in a situation like this, it wastes an entire time slice doing nothing but checking a value that isn’t going to change! The problem gets worse with N threads contending for a lock; N − 1 time slices may be wasted in a similar manner, simply spinning and waiting for a single thread to release the lock. Hardware support alone cannot solve the problem. We’ll need OS support too!

Just Yield

Our first try is a simple and friendly approach: when you are going to spin, instead give up the CPU to another thread.

void init() {

flag = 0;

}

void lock() {

while (TestAndSet( & flag, 1) == 1)

yield(); // give up the CPU

}

void unlock() {

flag = 0;

}

In this approach, we assume an operating system primitive yield() which a thread can call when it wants to give up the CPU and let another thread run. A thread can be in one of three states (running, ready, or blocked); yield is simply a system call that moves the caller from the running state to the ready state, and thus promotes another thread to running. Thus, the yielding thread essentially deschedules itself.

In this approach, we assume an operating system primitive yield() which a thread can call when it wants to give up the CPU and let another thread run. A thread can be in one of three states (running, ready, or blocked); yield is simply a system call that moves the caller from the running state to the ready state, and thus promotes another thread to running. Thus, the yielding thread essentially deschedules itself.

Let us now consider the case where there are many threads (say 100) contending for a lock repeatedly. In this case, if one thread acquires the lock and is preempted before releasing it, the other 99 will each call lock(), find the lock held, and yield the CPU. Assuming some kind of round-robin scheduler, each of the 99 will execute this run-and-yield pattern before the thread holding the lock gets to run again. While better than our spinning approach (which would waste 99 time slices spinning), this approach is still costly; the cost of a context switch can be substantial, and there is thus plenty of waste. A thread may get caught in an endless yield loop while other threads repeatedly enter and exit the critical section. We clearly will need an approach that addresses this problem directly.

Using Queues: Sleeping Instead Of Spinning

. The scheduler determines which thread runs next; if the scheduler makes a bad choice, a thread runs that must either spin waiting for the lock (our first approach), or yield the CPU immediately (our second approach). Thus, we must explicitly exert some control over which thread next gets to acquire the lock after the current holder releases it. To do this, we will need a little more OS support, as well as a queue to keep track of which threads are waiting to acquire the lock. park() to put a calling thread to sleep, and unpark(threadID) to wake a particular thread as designated by threadID. These two routines can be used in tandem to build a lock that puts a caller to sleep if it tries to acquire a held lock and wakes it when the lock is free.

typedef struct __lock_t {

int flag;

int guard;

queue_t * q;

}

lock_t;

void lock_init(lock_t * m) {

m - > flag = 0;

m - > guard = 0;

queue_init(m - > q);

}

void lock(lock_t * m) {

while (TestAndSet( & m - > guard, 1) == 1)

; //acquire guard lock by spinning

if (m - > flag == 0) {

m - > flag = 1; // lock is acquired

m - > guard = 0;

} else {

queue_add(m - > q, gettid());

m - > guard = 0;

park();

}

}

void unlock(lock_t * m) {

while (TestAndSet( & m - > guard, 1) == 1)

; //acquire guard lock by spinning

if (queue_empty(m - > q))

m - > flag = 0; // let go of lock; no one wants it

else

unpark(queue_remove(m - > q)); // hold lock

// (for next thread!)

m - > guard = 0;

}

We do a couple of interesting things in this example. First, we combine the old test-and-set idea with an explicit queue of lock waiters to make a more efficient lock. Second, we use a queue to help control who gets the lock next and thus avoid starvation.

You might notice how the guard is used, basically as a spin-lock around the flag and queue manipulations the lock is using. a thread might be interrupted while acquiring or releasing the lock, and thus cause other threads to spin-wait for this one to run again. However, the time spent spinning is quite limited.

You might also observe that in lock(), when a thread can not acquire the lock (it is already held), we are careful to add ourselves to a queue (by calling the gettid() function to get the thread ID of the current thread),set guard to 0, and yield the CPU

You might further detect that the flag does not get set back to 0 when another thread gets woken up. Why is this? Well, it is not an error, but rather a necessity! When a thread is woken up, it will be as if it is returning from park(); however, it does not hold the guard at that point in the code and thus cannot even try to set the flag to 1. Thus, we just pass the lock directly from the thread releasing the lock to the next thread acquiring it; flag is not set to 0 in-between.

Finally, you might notice the perceived race condition in the solution, just before the call to park(). With just the wrong timing, a thread will be about to park, assuming that it should sleep until the lock is no longer held. A switch at that time to another thread (say, a thread holding the lock) could lead to trouble, for example, if that thread then released the lock. The subsequent park by the first thread would then sleep forever (potentially), a problem sometimes called the wakeup/waiting race. solving this problem by adding a third system call: setpark(). By calling this routine, a thread can indicate it is about to park. If it then happens to be interrupted and another thread calls unpark before park is actually called, the subsequent park returns immediately instead of sleeping. The code modification, inside of lock() we will make an update in the code

the new code will be:

typedef struct __lock_t {

int flag;

int guard;

queue_t * q;

}

lock_t;

void lock_init(lock_t * m) {

m - > flag = 0;

m - > guard = 0;

queue_init(m - > q);

}

void lock(lock_t * m) {

while (TestAndSet( & m - > guard, 1) == 1)

; //acquire guard lock by spinning

if (m - > flag == 0) {

m - > flag = 1; // lock is acquired

m - > guard = 0;

} else {

queue_add(m->q, gettid());

setpark(); // new code

m->guard = 0;

}

}

void unlock(lock_t * m) {

while (TestAndSet( & m - > guard, 1) == 1)

; //acquire guard lock by spinning

if (queue_empty(m - > q))

m - > flag = 0; // let go of lock; no one wants it

else

unpark(queue_remove(m - > q)); // hold lock

// (for next thread!)

m - > guard = 0;

}

Last thing we need to consider is if there is any OS library to help us so we don't invent the wheel, sometimes some OS provide us with thread library that is more efficient than our implementation

Fortunately Linux provides a futex, sleep or wake can be taught but with futex it provides more in-kernel functionality that each futex can easily sleep or wake by providing the address

Specifically, two calls are available.

The call to futex wait(address, expected) puts the calling thread to sleep, assuming the value at address is equal to expected. If it is not equal, the call returns immediately.

The call to the routine futex wake(address) wakes one thread that is waiting on the queue

That's the end of the chapter the implementation of park is also hidden from the book so we only focus on the concurrency part without thinking too much on the implementation

![Operating Systems: Three Easy Pieces [Concurrency Ch.3: Locks]](https://cdn.hashnode.com/res/hashnode/image/upload/v1634308639158/yyOf34O1t.jpeg?w=1600&h=840&fit=crop&crop=entropy&auto=compress,format&format=webp)