Operating Systems: Three Easy Pieces [Concurrency Ch.1: Concurrency and Threads]

Operating Systems: Three Easy Pieces consists of 3 "Easy Pieces" one of them is Concurrency so I decided to summarizes this part of the book. This chapter discuss what is threads and why they are useful besides some important concepts like critical section, data race and atomic operation.

What is thread

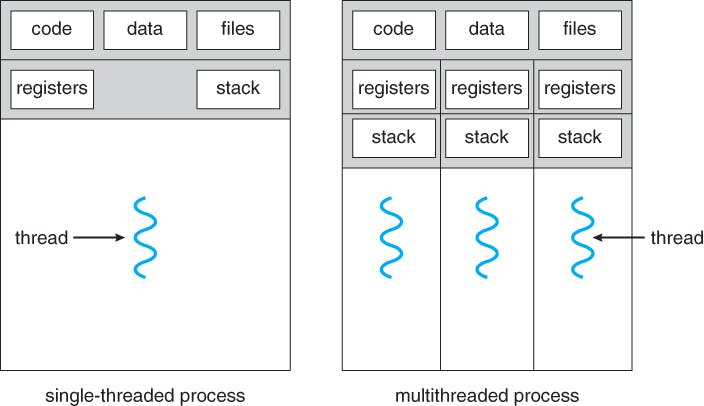

instead of our classic view of a single point of execution within a program (i.e., a single PC where instructions are being fetched from and executed), a multi-threaded program has more than one point of execution, another way to think of this is that each thread is very much like a separate process, except for one difference: they share the same address space and thus can access the same data

Each thread has its own private set of registers it uses for computation; thus, if there are two threads that are running on a single processor, when switching from running one (T1) to running the other (T2), a context switch must take place.

The context switch between threads is quite similar to the context switch between processes, as the register state of T1 must be saved and the register state of T2 restored before running T2.

A major difference between threads and processes concerns the stack. However, in a multi-threaded process, each thread runs independently and of course may call into various routines to do whatever work it is doing. Instead of a single stack in the address space, there will be one per thread.

Why threads

Imagine you are writing a program that performs operations on very large arrays, for example, adding two large arrays together, or incrementing the value of each element in the array by some amount if you are executing the program on a system with multiple processors, you have the potential of speeding up this process considerably by using the processors to each perform a portion of the work.

Another good reason is to avoid blocking program progress due to slow I/O, Imagine that you are writing a program that performs different types of I/O: either waiting to send or receive a message, for an explicit disk I/O to complete, or even (implicitly) for a page fault to finish. Instead of waiting, your program may wish to do something else, including utilizing the CPU to perform computation, or even issuing further I/O requests. Using threads is a natural way to avoid getting stuck

NOTE: The task of transforming your standard single-threaded program into a program that does this sort of work on multiple CPUs is called parallelization Now lets dig in and create our first thread

Thread Creation

We will create 2 threads, T1 and its name will be "A" and T2 and its name will be "B" each one of them will print their given name in concurrency way

#include <stdio.h>

#include <stdlib.h>

#include <pthread.h>

void *mythread(void *arg) {

printf("%s\n", (char *) arg);

return NULL;

}

int main(int argc, char *argv[]) {

pthread_t t1, t2;

printf("main: begin\n");

pthread_create(&t1, NULL, mythread, "A");

pthread_create(&t2, NULL, mythread, "B");

// join waits for the threads to finish

pthread_join(t1, NULL);

pthread_join(t2, NULL);

printf("main: end\n");

return 0;

}

After creating the two threads (let’s call them T1 and T2), the main thread calls pthread join(), which waits for a particular thread to complete. It does so twice, thus ensuring T1 and T2 will run and complete before finally allowing the main thread to run again.

Once a thread is created, it may start running right away, alternately it may be put in a “ready” but not “running” state and thus not run yet, but let’s not worry about this possibility quite yet.

Let us examine the possible execution ordering of this little program

First possibility:

| main | Thread 1 | Thread 2 |

|---|---|---|

| starts running | ||

| prints “main: begin” | ||

| creates Thread 1 | ||

| creates Thread 2 | ||

| waits for T1 | ||

| runs | ||

| Prints "A" | ||

| returns | ||

| waits for T2 | ||

| runs | ||

| Prints "B" | ||

| returns | ||

| prints “main: end” |

in this trace thread A done and then thread B but the scheduler doesn't always excite threads in order they written in the code, Remember that: the 2 threads will be at least in ready mode and they maybe running in the same time. lets trace another example

Second possibility:

| main | Thread 1 | Thread 2 |

|---|---|---|

| starts running | ||

| prints “main: begin” | ||

| creates Thread 1 | ||

| creates Thread 2 | ||

| runs | ||

| Prints "B" | ||

| returns | ||

| waits for T1 | ||

| runs | ||

| Prints "A" | ||

| returns | ||

| waits for T2 But its returns returns immediately; T2 is done | ||

| prints “main: end” |

What runs next is determined by the OS scheduler so it is hard to know what will run at any given moment in time, you need to be careful when write concurrency code.

Shared Data

Remember what we said about why threads are important, incrementing variable by one can be faster if we wrote concurrency code, now lets write code so our variable "counter" will be 20000000(2e7) with 2 threads. So we can run each thread to increment the counter variable by 10000000(1e7)

#include <stdio.h>

#include <stdlib.h>

#include <pthread.h>

int max;

volatile int counter = 0; // shared global variable

void *mythread(void *arg) {

int i; // stack (private per thread)

for (i = 0; i < max; i++) {

counter = counter + 1; // shared: only one

}

return NULL;

}

int main(int argc, char *argv[]) {

max = atoi(argv[1]);

pthread_t p1, p2;

pthread_create(&p1, NULL, mythread, "A");

pthread_create(&p2, NULL, mythread, "B");

// join waits for the threads to finish

pthread_join(p1, NULL);

pthread_join(p2, NULL);

printf("main: done\n [counter: %d]\n [should: %d]\n",

counter, max*2);

return 0;

}

So we expect to run this code and get the final result is 20000000

Unfortunately, when we run this code, even on a single processor, we don’t necessarily get the desired result. Sometimes, we get:

main: done with both (counter = 19345221)

try to run it again maybe we can get our desire value but output may be

main: done with both (counter = 19221041)

Not only is each run wrong, but also yields a different result! A big

question remains: why does this happen?

Data Race(Race Conditions)

To understand why this happens, we must understand the code sequence that the compiler generates for the update to counter. In this case, we wish to simply add a number (1) to counter. Thus, the code sequence for doing so might look something like this (in x86);

mov 0x8049a1c, %eax

add $0x1, %eax

mov %eax, 0x8049a1c

This example assumes that the variable counter is located at address 0x8049a1c. In this three-instruction sequence, the x86 mov instruction is used first to get the memory value at the address and put it into register eax. Then, the add is performed, adding 1 (0x1) to the contents of the eax register, and finally, the contents of eax are stored back into memory at the same address.

Let us imagine one (Thread 1) enters this region of code, and is thus about to increment counter by one. It loads the value of counter (let’s say it’s 50 to begin with) into its register eax. Thus, eax=50 for Thread 1. Then it adds one to the register; thus eax=51. Now, something unfortunate happens: a timer interrupt goes off; thus, the OS saves the state of the currently running thread.

OS Thread1 Eax Counter

before critical section 0 50

mov 8049a1c,%eax 50 50

add $0x1,%eax 51 50

Now something worse happens: Thread 2 is chosen to run, and it enters this same piece of code. It also executes the first instruction, getting the value of counter and putting it into its eax, The value of counter is still 50 at this point, and thus Thread 2 has eax=50, Then incrementing eax by 1 (thus eax=51), and then saving the contents of eax into counter (address 0x8049a1c). Thus, the global variable counter now has the value 51.

interrupt

save T1

restore T2

OS Thread2 Eax Counter

before critical section 0 50

mov 8049a1c,%eax 50 50

add $0x1,%eax 51 50

mov %eax,8049a1c 51 51

Finally, another context switch occurs, and Thread 1 resumes running. Recall that it had just executed the mov and add, and is now about to perform the final mov instruction. Recall also that eax=51. Thus, the final mov instruction executes, and saves the value to memory; the counter is set to 51 again.

interrupt

save T2

restore T1

OS Thread1 Eax Counter

mov %eax,8049a1c 51 51

Sadly our counter will be 51 not 52. What we have demonstrated here is called a race condition (or, more specifically, a data race),With some bad luck (i.e., context switches that occur at untimely points in the execution), we get the wrong result.

So, Because multiple threads executing this code can result in a race condition, we call this code a critical section. A critical section is a piece of code that accesses a shared variable (or more generally, a shared resource) and must not be concurrently executed by more than one thread.

What we really want for this code is what we call mutual exclusion. This property guarantees that if one thread is executing within the critical section, the others will be prevented from doing so.

Atomicity Operations

Atomically, means “as a unit”, which sometimes we take as “all or none.” it should either appear as if all of the actions you wish to group together occurred, or that none of them occurred.

we need to enter our critical section in atomically sequence so no two threads will be in the same critical section at the same time which we will know that deeper in the next chapters

So we reached the end, hope you find anything useful :) feel free to give a feedback

![Operating Systems: Three Easy Pieces [Concurrency Ch.1: Concurrency and Threads]](https://cdn.hashnode.com/res/hashnode/image/upload/v1633721337573/Qgry38Lcq.jpeg?w=1600&h=840&fit=crop&crop=entropy&auto=compress,format&format=webp)